251024 Deep fakes

The following information (all from reputable sources, with credit given to each) may well help you keep your life and finances secure, IF you pay attention to the advice given here. Be vigilant in your day-to-day computer use and with the email, text messages and even phone calls you receive. Any one of these could be an attempt to scam you.

Ideas Made to Matter, Deepfakes, explained by Meredith Somers

From https://mitsloan.mit.edu/ideas-made-to-matter/deepfakes-explained

Jul 21, 2020

Why It Matters

Deepfakes can be used to manipulate and threaten individuals and corporations. But with a better understanding of the technology, executives can take steps to protect themselves and their companies.

In March 2019, the CEO of a U.K.-based energy firm listened over the phone as his boss — the leader of the firm’s German parent company — ordered the transfer of €220,000 to a supplier in Hungary.

News reports would later detail that the CEO recognized the “slight German accent and the melody” of his chief’s voice and followed the order to transfer the money [equivalent to about $243,000] within an hour. The caller tried several other times to get a second round of money, but by then the U.K. executive had grown suspicious and did not make any more transfers.

The €220,000 was moved to Mexico and channeled to other accounts, and the energy firm — which was not identified — reported the incident to its insurance company, Euler Hermes Group SA. An official with Euler Hermes said the thieves used artificial intelligence to create a deepfake of the German executive’s voice, though reports have since questioned the lack of supporting evidence.

What’s for certain, however, is that the technology for this type of crime does exist, and it’s only a matter of when the next attack will happen and who will be the target.

“It’s a time to be more wary,” said Halsey Burgund, a fellow in the MIT Open Documentary Lab. “One should think of everything one puts out on the internet freely as potential training data for somebody to do something with.”

The manipulation of data is not new. Ancient Romans chiseled names and portraits off stone, permanently deleting a person’s identity and history. Soviet leader Joseph Stalin used censorship and image editing to control his persona and government in the early-mid 20th century. The advent of the computer age meant a few clicks of a mouse could shrink a waistline or erase someone from a photograph. Data manipulation today still relies on computers, but as the incident with the energy firm shows, the human voice — and, increasingly, video clips — are being used as a way to convince someone that what they’re hearing or seeing is real.

And while there might be an argument for using a deepfake for good, experts warn that without an understanding of them, a deepfake can wreak havoc on someone’s personal and professional life.

What is a deepfake?

A deepfake refers to a specific kind of synthetic media where a person in an image or video is swapped with another person’s likeness.

The term “deepfake” was first coined in late 2017 by a Reddit user of the same name. This user created a space on the online news and aggregation site, where they shared pornographic videos that used open-source face-swapping technology.

The term has since expanded to include “synthetic media applications” that existed before the Reddit page and new creations like StyleGAN — “realistic-looking still images of people that don’t exist,” said Henry Ajder, head of threat intelligence at deepfake detection company Deeptrace.

In more recent examples, deepfakes can be a voice that sounds like your boss on the other end of a phone line, Facebook’s Mark Zuckerberg in an edited video touting how great it is to have billions of people’s data, or Belgium’s prime minister linking the coronavirus pandemic to climate change during a manipulated recorded speech.

“The term understandably has a negative connotation, but there are a number of potentially beneficial use cases for businesses, specifically applications in marketing and advertising that are already being utilized by well-known brands,” Ajder said.

That’s why a growing number of people in this space are instead using the term “artificial intelligence-generated synthetic media,” Ajder said. It’s broad enough to include the original definition of deepfake, but also specific enough to omit things like computer generated images from movies, or photoshopped images — both of which are technically examples of something that’s been modified.

The next bit of information comes directly from Wikipedia, the free encyclopedia:

A facial recognition system[1] is a technology potentially capable of matching a human face from a digital image or a video frame against a database of faces. Such a system is typically employed to authenticate users through ID verification services, and works by pinpointing and measuring facial features from a given image.[2]
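To make the idea of "measuring facial features and matching them against a database" more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the names `KNOWN_FACES` and `match_face`, the three measurements per face, and the `threshold` value are all hypothetical and are not taken from Wikipedia or from any real product. Real systems use many more measurements and far more sophisticated matching.

```python
import math

# Hypothetical database: each known person is stored as a short list of
# facial measurements (for example eye spacing, nose length, jaw width),
# scaled to comparable units. These numbers are made up for illustration.
KNOWN_FACES = {
    "alice": [0.42, 0.31, 0.58],
    "bob": [0.35, 0.40, 0.62],
}


def match_face(features, database, threshold=0.05):
    """Return the name of the closest stored face, or None if none is close enough."""
    best_name = None
    best_distance = float("inf")
    for name, stored in database.items():
        # Straight-line (Euclidean) distance between the two sets of measurements.
        distance = math.dist(features, stored)
        if distance < best_distance:
            best_name, best_distance = name, distance
    # Only accept the match if it falls within the (assumed) tolerance.
    return best_name if best_distance <= threshold else None


if __name__ == "__main__":
    print(match_face([0.43, 0.30, 0.58], KNOWN_FACES))
    print(match_face([0.90, 0.90, 0.90], KNOWN_FACES))
```

The first call uses measurements very close to the stored values for "alice", so it is accepted as a match. The second is far from every stored face and is rejected. A convincing deepfake is a concern precisely because it may produce measurements that land inside that tolerance.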

Development began on similar systems in the 1960s, beginning as a form of computer application. Since their inception, facial recognition systems have seen wider uses in recent times on smartphones and in other forms of technology, such as robotics. Because computerized facial recognition involves the measurement of a human’s physiological characteristics, facial recognition systems are categorized as biometrics. Although the accuracy of facial recognition systems as a biometric technology is lower than iris recognition, fingerprint image acquisition, palm recognition or voice recognition, it is widely adopted due to its contactless process.[3] Facial recognition systems have been deployed in advanced human–computer interaction, video surveillance, law enforcement, passenger screening, decisions on employment and housing and automatic indexing of images.[4][5]

Facial recognition systems are employed throughout the world today by governments and private companies.[6] Their effectiveness varies, and some systems have previously been scrapped because of their ineffectiveness. The use of facial recognition systems has also raised controversy, with claims that the systems violate citizens’ privacy, commonly make incorrect identifications, encourage gender norms[7][8] and racial profiling,[9] and do not protect important biometric data. The appearance of synthetic media such as deepfakes has also raised concerns about its security.[10] These claims have led to the ban of facial recognition systems in several cities in the United States.[11] Growing societal concerns led social networking company Meta Platforms to shut down its Facebook facial recognition system in 2021, deleting the face scan data of more than one billion users.[12][13] The change represented one of the largest shifts in facial recognition usage in the technology’s history. IBM also stopped offering facial recognition technology due to similar concerns.[14]

The following signs can help you spot a deepfake:

- Face — Is someone blinking too much or too little? Do their eyebrows fit their face? Is someone’s hair in the wrong spot? Does their skin look airbrushed or, conversely, are there too many wrinkles?

- Audio — Does someone’s voice not match their appearance (e.g., a heavyset man with a higher-pitched feminine voice)?

- Lighting — What sort of reflection, if any, are a person’s glasses giving under a light? (Deepfakes often fail to fully represent the natural physics of lighting.)

The best way to inoculate people against deepfakes is exposure, Groh said. To support and study this idea, Groh and his colleagues created an online test as a resource for people to experience and learn from interacting with deepfakes.

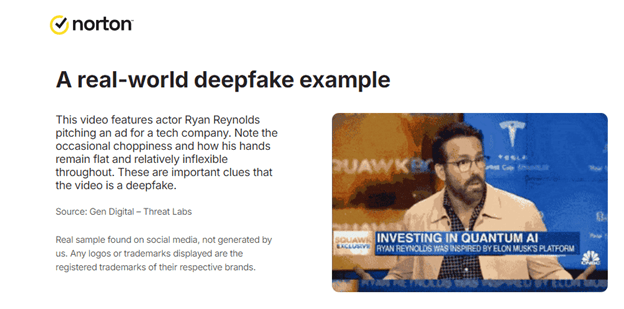

A graphic from “My Norton” recently sent to my computer.

The picture with Ryan Reynolds is actually a frame from a short video clip that I was not able to embed in this text as a movie or GIF.

Take this information seriously: the potential impact on you and your family could be severe.